Edge detection algorithms are not a part of the trivial programs. They comprise of an advanced thinking algorithm and implementation. There are various types of Edge detection algorithms:

1. Laplace : This edge detection Gives the second order differentiation of an image. A very crude one

2. Prewitt: This edge detection is a composition of two edge maps. One is the original image differentiated along the horizontal axis and the other differentiated along the vertical axis. Adding these two as two perpendicular vectors will give the final edge map.

3. Sobel: Similar to Prewitt, but the differentiation technique is different.

4. Canny: The most complex of all the edge detection algorithms. It is considered as the most efficient one.

We will now look at the process of the canny edge detection

Canny edge detection is a four step process.

1. Initially, a gaussian blur is applied to clear any speckles and free the image of noise.

2. Now a Sobel or Prewitt operator is applied to get two edge maps. Horizontal edge map gradx and vertical edge map grady. The edge map is a combination of these two edge maps. Also, The direction of gradient at each point, i.e, the angle is calculated by

theta = arctan(grady/gradx)

Now, since we are interested in only major edges, we will remove edges below a certain threshold.

3. A non-maximum suppression algorithm is applied now. The concept is very simple. For a given pixel, there are 8 neighboring pixels. Hence, there are 4 possible directions, 0 degrees,45 degrees, 90 degrees and 135 degrees.

The angles are hence quantized into these 4 angles(We will later look how we do it in python). Now consider a pixel in the image. Let us assume that the angle of gradient at that point is 0 degrees. This implies that the direction of edge should be perpendicular to it,i.e running along the 90 degrees line. Hence we will check for the left and the right neighbouring gradient values. If the gradient at the present pixel is greater than its left and right neighbours, then it is considered an edge, else it is discarded. This process gives single pixel thick edges.

4. Now a hysteresis based edge scanning is done. It is known that major important edges will be along curves, not as isolated points. A two threshold scanning is done. The high threshold, Th will decide the starting of the edge and the Tl will decide the ending of the edge. Once this process is done, we get a binary map of the edges.

Let us see how it is implemented in Python using scipy. The steps are numbered in the same order as above.

Few points first:

P1.Why should an image be differentiated to get its image? Since, the grayscale value of the image changes much rapidly near the edges than other places. When the gray scale value is almost constant , the differentiation at that pixel will not yield any sharp edges.

P2. We will be using a term called convolution. I will briefly present it, as a tool, not as a concept that is taught for electrical engineers.

Consider a 3×3 matrix | 0 1 0|

| 1 1 1|

|0 1 0|

We know that our image is actually a huge matrix, with each value showing the grayscale intensity. Convlution is running the 3×3 matrix across this image. What does that mean? It means that, The above matrix is centered at every pixel in the image. Now, the 3×3 matrix’s values are multiplied with the correspoding points in the image and added. This result replaces the current value of the pixel. Hence if we want a differentiation matrix ( we will call the multiplying 3×3 matrix as kernel from now), we have:

|-1 0 1|

|-1 0 1|

|-1 0 1|

This is called a Prewitt operator.

Now let us go ahead and do it in python.

1. The code depends on scipy for array operations. First, the image is loaded as a grayscale image array. A gaussian kernel with a chosen standard deviation and kernel size is applied to the image to remove any noise.

2. A Prewitt operator is applied to create two images, horizontally differentiated and vertically differentiated. Let us call them gradx and grady respectively. The gradient map is given by grad = squareroot(gradx^2+grady^2), and the angle of gradient at each point is arctan(grady/gradx). In the code, arctan2 function is used to find angle, since arctan2 also takes care of the signs of the numerator and denominator. arctan2 output runs from -180 to 180. We add 180 further to make the angles go from 0 to 360.

3. Now we quantize the angles to help the non maximum suppression. The division is as follows:

a.0-22.5 or 157.5-202.5 or 337.5-360 => 0 degrees

b.22.5-67.5 or 202.5-247.5 =>45 degrees

c.67.5-112.5 or 247.5 to 292.5 =>90 degrees

d.112.5-157.5 or 292.5-337.5 => 135 degrees.

Having quantized the angles, the previously discussed non maximum suppression algorithm is run to get a single pixel thick edges of the image.

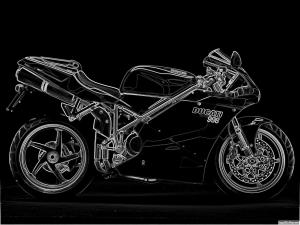

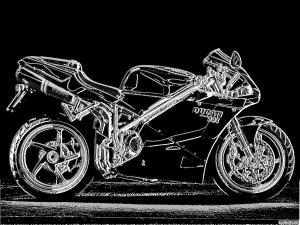

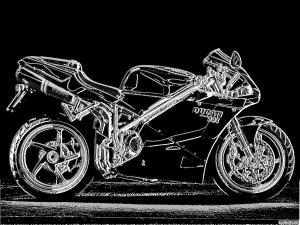

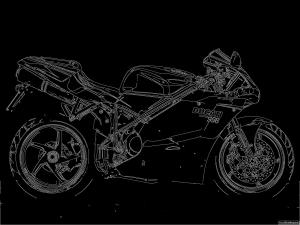

4. The final step is hysteresis edge detection. For our test image, a Th of 50 and Tl of 5 is used. This implies that, an edge is started if the pixel gray scale value is greater than 50 and is terminated when the grayscale value is less than 5. The edges are given white colour and the non edges are left black. The following is the final image obtained.

I have also included the Python code to help you understand better. Please note that you will need python along with scipy to use the module. Also, Python Image Library can be optionally installed to view the image.

I have also included the Python code to help you understand better. Please note that you will need python along with scipy to use the module. Also, Python Image Library can be optionally installed to view the image.

'''

Module for Canny edge detection

Requirements: 1.scipy.(numpy is also mandatory, but it is assumed to be

installed with scipy)

2. Python Image Library(only for viewing the final image.)

Author: Vishwanath

contact: vishwa.hyd@gmail.com

'''

try:

import Image

except ImportError:

print 'PIL not found. You cannot view the image'

import os

from scipy import *

from scipy.ndimage import *

from scipy.signal import convolve2d as conv

class Canny:

'''

Create instances of this class to apply the Canny edge

detection algorithm to an image.

input: imagename(string),sigma for gaussian blur

optional args: thresHigh,thresLow

output: numpy ndarray.

P.S: use canny.grad to access the image array

Note:

1. Large images take a lot of time to process, Not yet optimised

2. thresHigh will decide the number of edges to be detected. It

does not affect the length of the edges being detected

3. thresLow will decide the lenght of hte edges, will not affect

the number of edges that will be detected.

usage example:

>>>canny = Canny('image.jpg',1.4,50,10)

>>>im = canny.grad

>>>Image.fromarray(im).show()

'''

def __init__(self,imname,sigma,thresHigh = 50,thresLow = 10):

self.imin = imread(imname,flatten = True)

# Create the gauss kernel for blurring the input image

# It will be convolved with the image

gausskernel = self.gaussFilter(sigma,5)

# fx is the filter for vertical gradient

# fy is the filter for horizontal gradient

# Please not the vertical direction is positive X

fx = self.createFilter([1, 1, 1,

0, 0, 0,

-1,-1,-1])

fy = self.createFilter([-1,0,1,

-1,0,1,

-1,0,1])

imout = conv(self.imin,gausskernel)[1:-1,1:-1]

gradx = conv(imout,fx)[1:-1,1:-1]

grady = conv(imout,fy)[1:-1,1:-1]

# Net gradient is the square root of sum of square of the horizontal

# and vertical gradients

grad = hypot(gradx,grady)

theta = arctan2(grady,gradx)

theta = 180 + (180/pi)*theta

# Only significant magnitudes are considered. All others are removed

x,y = where(grad < 10)

theta[x,y] = 0

grad[x,y] = 0

# The angles are quantized. This is the first step in non-maximum

# supression. Since, any pixel will have only 4 approach directions.

x0,y0 = where(((theta<22.5)+(theta>157.5)*(theta<202.5)

+(theta>337.5)) == True)

x45,y45 = where( ((theta>22.5)*(theta<67.5)

+(theta>202.5)*(theta<247.5)) == True)

x90,y90 = where( ((theta>67.5)*(theta<112.5)

+(theta>247.5)*(theta<292.5)) == True)

x135,y135 = where( ((theta>112.5)*(theta<157.5)

+(theta>292.5)*(theta<337.5)) == True)

self.theta = theta

Image.fromarray(self.theta).convert('L').save('Angle map.jpg')

self.theta[x0,y0] = 0

self.theta[x45,y45] = 45

self.theta[x90,y90] = 90

self.theta[x135,y135] = 135

x,y = self.theta.shape

temp = Image.new('RGB',(y,x),(255,255,255))

for i in range(x):

for j in range(y):

if self.theta[i,j] == 0:

temp.putpixel((j,i),(0,0,255))

elif self.theta[i,j] == 45:

temp.putpixel((j,i),(255,0,0))

elif self.theta[i,j] == 90:

temp.putpixel((j,i),(255,255,0))

elif self.theta[i,j] == 45:

temp.putpixel((j,i),(0,255,0))

self.grad = grad.copy()

x,y = self.grad.shape

for i in range(x):

for j in range(y):

if self.theta[i,j] == 0:

test = self.nms_check(grad,i,j,1,0,-1,0)

if not test:

self.grad[i,j] = 0

elif self.theta[i,j] == 45:

test = self.nms_check(grad,i,j,1,-1,-1,1)

if not test:

self.grad[i,j] = 0

elif self.theta[i,j] == 90:

test = self.nms_check(grad,i,j,0,1,0,-1)

if not test:

self.grad[i,j] = 0

elif self.theta[i,j] == 135:

test = self.nms_check(grad,i,j,1,1,-1,-1)

if not test:

self.grad[i,j] = 0

init_point = self.stop(self.grad, thresHigh)

# Hysteresis tracking. Since we know that significant edges are

# continuous contours, we will exploit the same.

# thresHigh is used to track the starting point of edges and

# thresLow is used to track the whole edge till end of the edge.

while (init_point != -1):

#Image.fromarray(self.grad).show()

print 'next segment at',init_point

self.grad[init_point[0],init_point[1]] = -1

p2 = init_point

p1 = init_point

p0 = init_point

p0 = self.nextNbd(self.grad,p0,p1,p2,thresLow)

while (p0 != -1):

#print p0

p2 = p1

p1 = p0

self.grad[p0[0],p0[1]] = -1

p0 = self.nextNbd(self.grad,p0,p1,p2,thresLow)

init_point = self.stop(self.grad,thresHigh)

# Finally, convert the image into a binary image

x,y = where(self.grad == -1)

self.grad[:,:] = 0

self.grad[x,y] = 255

def createFilter(self,rawfilter):

'''

This method is used to create an NxN matrix to be used as a filter,

given a N*N list

'''

order = pow(len(rawfilter),0.5)

order = int(order)

filt_array = array(rawfilter)

outfilter = filt_array.reshape((order,order))

return outfilter

def gaussFilter(self,sigma,window = 3):

'''

This method is used to create a gaussian kernel to be used

for the blurring purpose. inputs are sigma and the window size

'''

kernel = zeros((window,window))

c0 = window // 2

for x in range(window):

for y in range(window):

r = hypot((x-c0),(y-c0))

val = (1.0/2*pi*sigma*sigma)*exp(-(r*r)/(2*sigma*sigma))

kernel[x,y] = val

return kernel / kernel.sum()

def nms_check(self,grad,i,j,x1,y1,x2,y2):

'''

Method for non maximum supression check. A gradient point is an

edge only if the gradient magnitude and the slope agree

for example, consider a horizontal edge. if the angle of gradient

is 0 degress, it is an edge point only if the value of gradient

at that point is greater than its top and bottom neighbours.

'''

try:

if (grad[i,j] > grad[i+x1,j+y1]) and (grad[i,j] > grad[i+x2,j+y2]):

return 1

else:

return 0

except IndexError:

return -1

def stop(self,im,thres):

'''

This method is used to find the starting point of an edge.

'''

X,Y = where(im > thres)

try:

y = Y.min()

except:

return -1

X = X.tolist()

Y = Y.tolist()

index = Y.index(y)

x = X[index]

return [x,y]

def nextNbd(self,im,p0,p1,p2,thres):

'''

This method is used to return the next point on the edge.

'''

kit = [-1,0,1]

X,Y = im.shape

for i in kit:

for j in kit:

if (i+j) == 0:

continue

x = p0[0]+i

y = p0[1]+j

if (x<0) or (y<0) or (x>=X) or (y>=Y):

continue

if ([x,y] == p1) or ([x,y] == p2):

continue

if (im[x,y] > thres): #and (im[i,j] < 256):

return [x,y]

return -1

# End of module Canny